Hello,

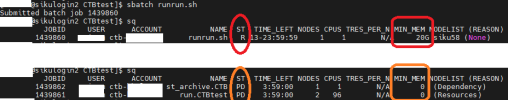

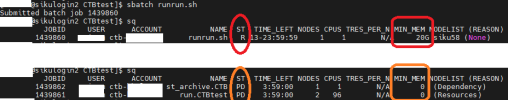

I have the following configuration for CESM2.1.3 on Canadian clusters:

config machines:

Code:

<?xml version="1.0"?>
<config_machines version="2.0">
  <machine MACH="siku">
    <DESC>https://wiki.ace-net.ca/wiki/Siku</DESC>
    <NODENAME_REGEX>.*.siku.ace-net.ca</NODENAME_REGEX>
    <OS>LINUX</OS>
    <COMPILERS>intel,gnu</COMPILERS>
    <MPILIBS>openmpi</MPILIBS>
    <PROJECT>ctb-XXXX</PROJECT>
    <CHARGE_ACCOUNT>ctb-XXXX</CHARGE_ACCOUNT>
    <CIME_OUTPUT_ROOT>/scratch/$USER/cesm/output</CIME_OUTPUT_ROOT>
    <DIN_LOC_ROOT>/scratch/$USER/cesm/inputdata</DIN_LOC_ROOT>
    <DIN_LOC_ROOT_CLMFORC>${DIN_LOC_ROOT}/atm/datm7</DIN_LOC_ROOT_CLMFORC>
    <DOUT_S_ROOT>$CIME_OUTPUT_ROOT/archive/case</DOUT_S_ROOT>
    <GMAKE>make</GMAKE>
    <GMAKE_J>8</GMAKE_J>
    <BATCH_SYSTEM>slurm</BATCH_SYSTEM>
    <SUPPORTED_BY>support@ace-net.ca</SUPPORTED_BY>
    <MAX_TASKS_PER_NODE>48</MAX_TASKS_PER_NODE>
    <MAX_MPITASKS_PER_NODE>48</MAX_MPITASKS_PER_NODE>
    <PROJECT_REQUIRED>TRUE</PROJECT_REQUIRED>
    <mpirun mpilib="openmpi">
      <executable>mpirun</executable>
      <arguments>
        <arg name="anum_tasks"> -np {{ total_tasks }}</arg>
      </arguments>
    </mpirun>
    <module_system type="module" allow_error="true">
      <init_path lang="perl">/cvmfs/soft.computecanada.ca/custom/software/lmod/lmod/init/perl</init_path>
      <init_path lang="python">/cvmfs/soft.computecanada.ca/custom/software/lmod/lmod/init/env_modules_python.py</init_path>
      <init_path lang="csh">/cvmfs/soft.computecanada.ca/custom/software/lmod/lmod/init/csh</init_path>
      <init_path lang="sh">/cvmfs/soft.computecanada.ca/custom/software/lmod/lmod/init/sh</init_path>
      <cmd_path lang="perl">/cvmfs/soft.computecanada.ca/custom/software/lmod/lmod/libexec/lmod perl</cmd_path>
      <cmd_path lang="python">/cvmfs/soft.computecanada.ca/custom/software/lmod/lmod/libexec/lmod python</cmd_path>
      <cmd_path lang="csh">module</cmd_path>
      <cmd_path lang="sh">module</cmd_path>
      <modules>
        <command name="purge"/>
        <command name="load">StdEnv/2023</command>
      </modules>
      <modules compiler="intel">
        <command name="load">intel/2023.2.1</command>
        <command name="load">git-annex/10.20231129</command>
        <command name="load">cmake/3.27.7</command>
      </modules>
      <modules mpilib="openmpi">
        <command name="load">openmpi/4.1.5</command>
        <command name="load">hdf5-mpi/1.14.2</command>
        <command name="load">netcdf-c++4-mpi/4.3.1</command>
        <command name="load">netcdf-fortran-mpi/4.6.1</command>
        <command name="load">netcdf-mpi/4.9.2</command>
        <command name="load">xml-libxml/2.0208</command>
        <command name="load">flexiblas/3.3.1</command>
      </modules>
    </module_system>
    <environment_variables>
      <env name="NETCDF_PATH">/cvmfs/soft.computecanada.ca/easybuild/software/2023/x86-64-v4/MPI/intel2023/openmpi4/pnetcdf/1.12.3</env>
      <env name="NETCDF_FORTRAN_PATH">/cvmfs/soft.computecanada.ca/easybuild/software/2023/x86-64-v4/MPI/intel2023/openmpi4/netcdf-fortran-mpi/4.6.1/</env>
      <env name="NETCDF_C_PATH">/cvmfs/soft.computecanada.ca/easybuild/software/2023/x86-64-v4/MPI/intel2023/openmpi4/netcdf-c++4-mpi/4.3.1/</env>
      <env name="NETLIB_LAPACK_PATH">/cvmfs/soft.computecanada.ca/easybuild/software/2023/x86-64-v3/Core/imkl/2023.2.0/mkl/2023.2.0/</env>
      <env name="OMP_STACKSIZE">256M</env>
      <env name="I_MPI_CC">icc</env>
      <env name="I_MPI_FC">ifort</env>
      <env name="I_MPI_F77">ifort</env>
      <env name="I_MPI_F90">ifort</env>
      <env name="I_MPI_CXX">icpc</env>
    </environment_variables>
    <resource_limits>
      <resource name="RLIMIT_STACK">300000000</resource>
    </resource_limits>
  </machine>
</config_machines>

config batch:

Code:

<?xml version="1.0"?>
<config_batch version="2.1">
  <batch_system type="slurm" MACH="siku">
    <batch_submit>sbatch</batch_submit>
    <batch_cancel>scancel</batch_cancel>
    <batch_directive>#SBATCH</batch_directive>
    <jobid_pattern>(\d+)$</jobid_pattern>
    <depend_string> --dependency=afterok:jobid</depend_string>
    <depend_allow_string> --dependency=afterany:jobid</depend_allow_string>
    <depend_separator>,</depend_separator>
    <batch_mail_flag>--mail-user</batch_mail_flag>
    <batch_mail_type_flag>--mail-type</batch_mail_type_flag>
    <batch_mail_type>none, all, begin, end, fail</batch_mail_type>
    <submit_args>
      <arg flag="--time" name="$JOB_WALLCLOCK_TIME"/>
      <arg flag="--account" name="$CHARGE_ACCOUNT"/>
    </submit_args>
    <directives>
      <directive>--job-name={{ job_id }}</directive>
      <directive>--nodes={{ num_nodes }}</directive>
      <directive>--ntasks-per-node={{ tasks_per_node }}</directive>
      <directive>--output={{ job_id }}</directive>
      <directive>--exclusive</directive>
      <directive>--mem=20G </directive>
    </directives>
    <!-- Unknown queues use the batch directives for the regular queue -->
    <unknown_queue_directives>regular</unknown_queue_directives>
    <queues>
      <queue walltimemax="12:00:00" nodemin="1" nodemax="42">siku</queue>
    </queues>
  </batch_system>
</config_batch>

I want to know how you define the minimum memory when you submit your jobs.

When I do the following, the minimum memory is not applied to the actual run. It is picked up only for the wrapper sbatch job, not for the real simulation:

Code:

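#!/bin/bash
# Memory request for this wrapper job only
#SBATCH --mem-per-cpu=20G

# case.submit then submits the simulation as a separate batch job
./case.submit --mail-user MYEMAIL@gmail.com --mail-type all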

The reason I am asking about this minimum memory option is that I am going to use a ctb allocation on my system. With this allocation, a job that has a minimum memory assigned starts immediately. As the picture above shows, with MIN_MEM set to 20G in the runrun.sh file, the bash script itself started immediately (red), but once it reached the case.submit command, the submitted job went to pending (orange) and took a very long time to start.

So I don't know how to assign this MIN_MEM. I did set <directive>--mem=20G </directive> in the config_batch file, but somehow that 20G is ignored. It is only honoured when I set #SBATCH --mem-per-cpu=20G, and then only for the bash script itself, not for the job submitted by case.submit.
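To make the symptom easier to verify, here is a minimal sketch that lists the #SBATCH directives a job script actually carries and reports whether a memory request is among them. The function names `show_sbatch_directives` and `has_mem_directive` are hypothetical helpers, and the assumption that the generated run script in the case directory is named `.case.run` should be checked against your own case.

```shell
#!/bin/bash
# Hypothetical helpers for inspecting a Slurm job script.

# Print every "#SBATCH" directive found in the script given as $1.
show_sbatch_directives() {
    local script="$1"
    if [ ! -r "$script" ]; then
        echo "cannot read: $script" >&2
        return 1
    fi
    grep -E '^#SBATCH' "$script"
}

# Succeed if the script carries a --mem= or --mem-per-cpu= directive.
has_mem_directive() {
    show_sbatch_directives "$1" | grep -Eq -- '--mem(-per-cpu)?='
}
```

Run from the case directory, `show_sbatch_directives .case.run` (file name assumed) would show whether `--mem=20G` made it into the script that case.submit hands to sbatch. If it is missing there, the directive in config_batch never reached the case, which would match the behaviour described above.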

I would be grateful if you could let me know how you do it, as I need to use the ctb allocation I received, and it will expedite my runs tremendously.

Thank you so much for your support.