wangsz@fio_org_cn

New Member

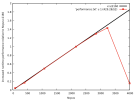

After I upgraded the sea ice component of CESM1.2.1 from CICE4 to CICE6 (keeping all other components unchanged), the model became much slower: 20 model years per wall-clock day, versus 35 model years per day before the upgrade. According to the timing information, the sea ice component is the bottleneck. Is this normal for CICE6? I could not find any information online comparing computational speed across CICE versions.

I am using the f19_g16 grid on my own server.

Thanks in advance.