I'm trying to run CLM5 at resolution f19_g16 with compset I1850Clm50BgcCropG on the Niagara cluster (Toronto). It works when I use ./case.submit.

However, I would like to submit the job with my own MPI submission script, following the Niagara Quickstart (Alliance Doc). I'm using the bash script below. The model does start, but it seems to run forever. For example, I set a 6 h walltime on 10 nodes (I also tested a range of 2 to 10 nodes with different walltimes, up to the 24 h maximum) for a test case at coarse resolution (~200 km), at monthly scale, for only 24 timesteps.

With ./case.submit, the run finishes successfully in about 1 hour and produces the model outputs. With the bash script below, the job never finishes, writes no outputs, and is finally cancelled with the error: cancelled due to time limit.

My question is: is the model actually running? What is the problem?

Any suggestions, please?

Thank you.

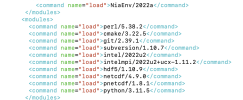

### The following modules are loaded in the config_machines.xml file:

######################

#!/bin/bash

#SBATCH --nodes=10

#SBATCH --ntasks=400

#SBATCH --time=6:00:00

#SBATCH --job-name msol_run_job

#SBATCH --output=mpirun_job_output_%j.txt

#SBATCH --mail-type=FAIL

#SBATCH --partition=compute

cd /scratch/..../runs/RUN_cesm2.1.3_11850Clm50BgcCropG_f19_g16_200km/bld

mpirun ./cesm.exe

### or ###

mpirun -np 400 /scratch/..../runs/RUN_cesm2.1.3_11850Clm50BgcCropG_f19_g16_200km/bld/cesm.exe >> cesm.log.$LID 2>&1
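Two details of the script above may differ from what ./case.submit does, and they are cheap to check. The script changes into the bld directory, and the second variant appends to cesm.log.$LID although $LID is never set in the script. The sketch below is only an illustration under stated assumptions: the helper names looks_like_rundir and make_lid are hypothetical, the idea that a staged run directory contains the driver namelist drv_in is an assumption about this CESM version, and RUNDIR and EXE are placeholders that are deliberately left unfilled.

```bash
#!/bin/bash
# Hypothetical helper: succeed only if DIR looks like a staged CESM run directory.
# Assumption: the driver namelist drv_in is present there once the case has been set up.
looks_like_rundir() {
  [ -d "$1" ] && [ -f "$1/drv_in" ]
}

# Hypothetical helper: build a timestamp-style log suffix to use as $LID,
# so the log file is not simply named "cesm.log.".
make_lid() {
  date +%y%m%d-%H%M%S
}

# Example use inside the batch script (RUNDIR and EXE are placeholders):
# looks_like_rundir "$RUNDIR" || { echo "no namelists found in $RUNDIR" >&2; exit 1; }
# cd "$RUNDIR" && LID=$(make_lid) && mpirun -np 400 "$EXE" >> "cesm.log.$LID" 2>&1
```

If the check fails for the directory the script changes into, the executable may be starting without its input files, which would be one possible explanation for a run that never produces output.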

##############################

##############################

slurmstepd: error: *** JOB 12346976 ON nia0634 CANCELLED AT 2024-03-13T05:06:14 DUE TO TIME LIMIT ***

scontrol show job 12346976

JobId=12346976 JobName=msol_run_job

UserId=msol(3131954) GroupId=cgf(6006293) MCS_label=N/A

Priority=2159090 Nice=0 Account=rrg-cgf QOS=normal

JobState=TIMEOUT Reason=TimeLimit Dependency=(null)

Requeue=0 Restarts=0 BatchFlag=1 Reboot=0 ExitCode=0:15

RunTime=06:00:25 TimeLimit=06:00:00 TimeMin=N/A

SubmitTime=2024-03-12T23:05:39 EligibleTime=2024-03-12T23:05:39

AccrueTime=2024-03-12T23:05:39

StartTime=2024-03-12T23:05:49 EndTime=2024-03-13T05:06:14 Deadline=N/A

SuspendTime=None SecsPreSuspend=0 LastSchedEval=2024-03-12T23:05:49 Scheduler=Main

Partition=compute AllocNode:Sid=nia-login06:200340

ReqNodeList=(null) ExcNodeList=(null)

NodeList=nia[0634-0643]

BatchHost=nia0634

NumNodes=10 NumCPUs=800 NumTasks=400 CPUs/Task=1 ReqB:S:C:T=0:0:*:*

ReqTRES=cpu=400,mem=1750000M,node=10,billing=200

AllocTRES=cpu=800,mem=1750000M,node=10,billing=400

Socks/Node=* NtasksPerN:B:S:C=0:0:*:* CoreSpec=*

MinCPUsNode=1 MinMemoryNode=175000M MinTmpDiskNode=0

Features=[skylake|cascade] DelayBoot=00:00:00

OverSubscribe=NO Contiguous=0 Licenses=(null) Network=(null)

Command=/gpfs/fs0/scratch/c/cgf/msol/RUN_cesm2.1.3_11850Clm50BgcCropG_f19_g16_200km/submit_job.sh

WorkDir=/gpfs/fs0/scratch/c/cgf/msol/RUN_cesm2.1.3_11850Clm50BgcCropG_f19_g16_200km

Comment=/opt/slurm/bin/sbatch --export=NONE submit_job.sh

StdErr=/gpfs/fs0/scratch/c/cgf/msol/RUN_cesm2.1.3_11850Clm50BgcCropG_f19_g16_200km/mpirun_job_output_12346976.txt

StdIn=/dev/null

StdOut=/gpfs/fs0/scratch/c/cgf/msol/RUN_cesm2.1.3_11850Clm50BgcCropG_f19_g16_200km/mpirun_job_output_12346976.txt

Power=

sacct -j 12346976

| JobID | JobName | Account | Elapsed | MaxVMSize | MaxRSS | SystemCPU | UserCPU | ExitCode |
|---|---|---|---|---|---|---|---|---|
| 12346976 | msol_run_+ | rrg-cgf | 06:00:25 | | | 00:05.383 | 00:14.038 | 0:0 |
| 12346976.ba+ | batch | rrg-cgf | 06:00:28 | 818460K | 13584K | 00:02.138 | 00:05.853 | 0:15 |
| 12346976.ex+ | extern | rrg-cgf | 06:00:25 | 148744K | 1084K | 00:00.004 | 00:00.004 | 0:0 |
| 12346976.0 | hydra_bst+ | rrg-cgf | 06:00:15 | 233307000K | 7569376K | 00:03.240 | 00:08.180 | 5:0 |
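As a rough way to answer "is the model actually running?", the sacct figures can be turned into a CPU-utilisation estimate: total CPU time divided by elapsed time times allocated CPUs. The sketch below uses the values reported for the whole job (Elapsed 06:00:25, SystemCPU 00:05.383, UserCPU 00:14.038) and NumCPUs=800 from scontrol. The function names to_seconds and cpu_efficiency are hypothetical helpers written for this illustration, not Slurm commands, and the calculation assumes these sacct columns cover all of the job's processes.

```bash
#!/bin/bash
export LC_ALL=C  # keep "." as the decimal separator in awk output

# Convert a Slurm time string ([DD-]HH:MM:SS or MM:SS.mmm) to seconds.
to_seconds() {
  awk -v t="$1" 'BEGIN {
    days = 0
    if (split(t, d, "-") == 2) { days = d[1]; t = d[2] }
    n = split(t, p, ":")
    if (n == 3)      s = p[1] * 3600 + p[2] * 60 + p[3]
    else if (n == 2) s = p[1] * 60 + p[2]
    else             s = p[1]
    printf "%.3f\n", days * 86400 + s
  }'
}

# CPU utilisation in percent: args are elapsed seconds, CPU seconds, CPU count.
cpu_efficiency() {
  awk -v e="$1" -v c="$2" -v n="$3" 'BEGIN { printf "%.6f\n", 100 * c / (e * n) }'
}

elapsed=$(to_seconds 06:00:25)
cpu=$(awk -v a="$(to_seconds 00:05.383)" -v b="$(to_seconds 00:14.038)" \
  'BEGIN { printf "%.3f\n", a + b }')
cpu_efficiency "$elapsed" "$cpu" 800
```

With about 19 seconds of CPU time over six hours on 800 CPUs, the result is well under 0.001 %, which suggests the processes were mostly idle or waiting rather than computing.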

kernel messages produced during job executions:

[Mar13 01:19] CIFS PidTable: buckets 64

[ +0.005947] CIFS BufTable: buckets 64

###############################################

However, I would like to submit a job using submission script (MPI): Niagara Quickstart - Alliance Doc. I'm using the following bash-script. The model does start running, but it seems that it goes forever! I mean, I set a e.g., 6h walltime, 10 nodes (and also a rang of 2 to 10 was tested with different walltimes -- max is 24-h) for a test-case modelling at a coarse resolution (~200km) at monthly scale for 24 timesteps only.

Running with ./case.submit takes around 1 hour to terminate the model run successfully with producing the model outputs; however, using the following bash-script takes forever and doesn't write any outputs, and finally it gives error: cancelled due to time limit.

My question is: is the model actually running? what's the problem?

Any suggestions please?

Thank you.

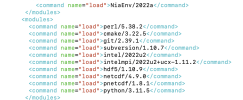

### the following modules are used and loaded in the config_machines.xml file:

######################

#!/bin/bash

#SBATCH --nodes=10

#SBATCH --ntasks=400

#SBATCH --time=6:00:00

#SBATCH --job-name msol_run_job

#SBATCH --output=mpirun_job_output_%j.txt

#SBATCH --mail-type=FAIL

#SBATCH --partition=compute

cd /scratch/..../runs/RUN_cesm2.1.3_11850Clm50BgcCropG_f19_g16_200km/bld

mpirun ./cesm.exe

### or ###

mpirun -np 400 /scratch/..../runs/RUN_cesm2.1.3_11850Clm50BgcCropG_f19_g16_200km/bld/cesm.exe >> cesm.log.$LID 2>&1

##############################

##############################

slurmstepd: error: *** JOB 12346976 ON nia0634 CANCELLED AT 2024-03-13T05:06:14 DUE TO TIME LIMIT ***

scontrol show job 12346976

JobId=12346976 JobName=msol_run_job

UserId=msol(3131954) GroupId=cgf(6006293) MCS_label=N/A

Priority=2159090 Nice=0 Account=rrg-cgf QOS=normal

JobState=TIMEOUT Reason=TimeLimit Dependency=(null)

Requeue=0 Restarts=0 BatchFlag=1 Reboot=0 ExitCode=0:15

RunTime=06:00:25 TimeLimit=06:00:00 TimeMin=N/A

SubmitTime=2024-03-12T23:05:39 EligibleTime=2024-03-12T23:05:39

AccrueTime=2024-03-12T23:05:39

StartTime=2024-03-12T23:05:49 EndTime=2024-03-13T05:06:14 Deadline=N/A

SuspendTime=None SecsPreSuspend=0 LastSchedEval=2024-03-12T23:05:49 Scheduler=Main

Partition=compute AllocNode:Sid=nia-login06:200340

ReqNodeList=(null) ExcNodeList=(null)

NodeList=nia[0634-0643]

BatchHost=nia0634

NumNodes=10 NumCPUs=800 NumTasks=400 CPUs/Task=1 ReqB:S:C:T=0:0:*:*

ReqTRES=cpu=400,mem=1750000M,node=10,billing=200

AllocTRES=cpu=800,mem=1750000M,node=10,billing=400

Socks/Node=* NtasksPerN:B:S:C=0:0:*:* CoreSpec=*

MinCPUsNode=1 MinMemoryNode=175000M MinTmpDiskNode=0

Features=[skylake|cascade] DelayBoot=00:00:00

OverSubscribe=NO Contiguous=0 Licenses=(null) Network=(null)

Command=/gpfs/fs0/scratch/c/cgf/msol/RUN_cesm2.1.3_11850Clm50BgcCropG_f19_g16_200km/submit_job.sh

WorkDir=/gpfs/fs0/scratch/c/cgf/msol/RUN_cesm2.1.3_11850Clm50BgcCropG_f19_g16_200km

Comment=/opt/slurm/bin/sbatch --export=NONE submit_job.sh

StdErr=/gpfs/fs0/scratch/c/cgf/msol/RUN_cesm2.1.3_11850Clm50BgcCropG_f19_g16_200km/mpirun_job_output_12346976.txt

StdIn=/dev/null

StdOut=/gpfs/fs0/scratch/c/cgf/msol/RUN_cesm2.1.3_11850Clm50BgcCropG_f19_g16_200km/mpirun_job_output_12346976.txt

Power=

sacct -j 12346976

JobID JobName Account Elapsed MaxVMSize MaxRSS SystemCPU UserCPU ExitCode

------------ ---------- ---------- ---------- ---------- ---------- ---------- ---------- --------

12346976 msol_run_+ rrg-cgf 06:00:25 00:05.383 00:14.038 0:0

12346976.ba+ batch rrg-cgf 06:00:28 818460K 13584K 00:02.138 00:05.853 0:15

12346976.ex+ extern rrg-cgf 06:00:25 148744K 1084K 00:00.004 00:00.004 0:0

12346976.0 hydra_bst+ rrg-cgf 06:00:15 233307000K 7569376K 00:03.240 00:08.180 5:0

kernel messages produced during job executions:

[Mar13 01:19] CIFS PidTable: buckets 64

[ +0.005947] CIFS BufTable: buckets 64

###############################################