It looks like this is dying in the initialization of the ocean model, based on the last output in the cpl.log file, but it's hard to tell what's going wrong here. Have you successfully run any other CESM configurations on this machine?

One possible problem is that there isn't enough memory for this one-degree fully-coupled run on just 64 processors. Are you able to run on more processors? If so, I would try that. We also typically use a processor layout where the ocean is on different processors from the other components, i.e., the ocean runs concurrently. (See section 5, "Controlling processors and threads", of the CIME master documentation for some information on this.) A reasonable starting point would be the out-of-the-box processor layout for this resolution, which has:

```
Pes setting: tasks is {'NTASKS_ATM': -8, 'NTASKS_LND': -4, 'NTASKS_ROF': -4, 'NTASKS_ICE': -4, 'NTASKS_OCN': -1, 'NTASKS_GLC': -8, 'NTASKS_WAV': -8, 'NTASKS_CPL': -8}
Pes setting: threads is {'NTHRDS_ATM': 1, 'NTHRDS_LND': 1, 'NTHRDS_ROF': 1, 'NTHRDS_ICE': 1, 'NTHRDS_OCN': 1, 'NTHRDS_GLC': 1, 'NTHRDS_WAV': 1, 'NTHRDS_CPL': 1}
Pes setting: rootpe is {'ROOTPE_ATM': 0, 'ROOTPE_LND': 0, 'ROOTPE_ROF': 0, 'ROOTPE_ICE': -4, 'ROOTPE_OCN': -8, 'ROOTPE_GLC': 0, 'ROOTPE_WAV': 0, 'ROOTPE_CPL': 0}
```

Here, negative numbers mean a number of full nodes rather than a number of tasks.
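To make the negative-number convention concrete, here is a minimal Python sketch that expands the layout above into absolute PE ranges. It assumes a hypothetical 36 cores per node (`CORES_PER_NODE`); substitute your machine's actual tasks-per-node value. The helper names are illustrative only and are not part of CIME.

```python
"""Sketch: expand PE settings where a negative value means a number of
full nodes. CORES_PER_NODE is a hypothetical, machine-dependent value."""

CORES_PER_NODE = 36  # hypothetical; use your machine's tasks-per-node

# Values copied from the out-of-the-box layout quoted above.
NTASKS = {"ATM": -8, "LND": -4, "ROF": -4, "ICE": -4,
          "OCN": -1, "GLC": -8, "WAV": -8, "CPL": -8}
ROOTPE = {"ATM": 0, "LND": 0, "ROF": 0, "ICE": -4,
          "OCN": -8, "GLC": 0, "WAV": 0, "CPL": 0}


def to_tasks(value: int, cores_per_node: int) -> int:
    """Negative values count full nodes; non-negative values count tasks."""
    return -value * cores_per_node if value < 0 else value


def pe_ranges(ntasks, rootpe, cores_per_node):
    """Return {component: (first_pe, last_pe)} for every component."""
    ranges = {}
    for comp, n in ntasks.items():
        first = to_tasks(rootpe[comp], cores_per_node)
        count = to_tasks(n, cores_per_node)
        ranges[comp] = (first, first + count - 1)
    return ranges


if __name__ == "__main__":
    for comp, (lo, hi) in pe_ranges(NTASKS, ROOTPE, CORES_PER_NODE).items():
        print(f"{comp}: PEs {lo}-{hi}")
```

With 36 cores per node, this layout spans 9 nodes, and OCN (PEs 288-323) shares no PEs with any other component, which is what running the ocean concurrently means here.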

Another thing you could try is a simpler configuration, in terms of compset and/or resolution. For example, it would be interesting to see whether an ocean-only configuration works (e.g., compset C with resolution T62_g37 for a lower-resolution ocean run, and then T62_g17 for a higher-resolution ocean run). An alternative or additional thing to try would be a lower-resolution atmosphere and land, such as BHIST with resolution f19_g17 (roughly 2-degree atmosphere/land instead of 1-degree).
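The test configurations suggested above could be set up with CIME's `create_newcase` script. The Python sketch below only assembles the command lines (it does not run them); the case names are hypothetical, and your site may also require `--machine` or `--compiler` options.

```python
"""Sketch: assemble create_newcase command lines for the simpler test
configurations. Case names are hypothetical; nothing is executed."""
import shlex

# (hypothetical case name, compset, resolution)
TEST_CASES = [
    ("test_C_g37", "C", "T62_g37"),          # ocean-only, lower-resolution ocean
    ("test_C_g17", "C", "T62_g17"),          # ocean-only, higher-resolution ocean
    ("test_BHIST_f19", "BHIST", "f19_g17"),  # ~2-degree atm/lnd, fully coupled
]


def create_newcase_cmd(case: str, compset: str, res: str) -> str:
    """Return a shell-quoted create_newcase invocation as a string."""
    args = ["./create_newcase", "--case", case,
            "--compset", compset, "--res", res]
    return shlex.join(args)


if __name__ == "__main__":
    for case, compset, res in TEST_CASES:
        print(create_newcase_cmd(case, compset, res))
```

Running each printed command from the CIME `scripts` directory, then `case.setup`, `case.build`, and `case.submit` in the new case directory, is the usual sequence.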

Thank you, Bill!

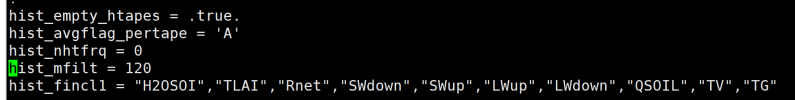

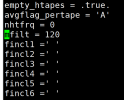

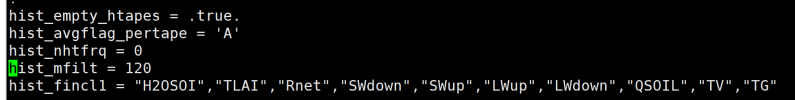

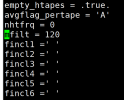

I have run F2000climo and FHIST successfully before. However, when I submit FHIST, the warnings and errors shown in figure 1 appear. Every component except the ocean produces output, but I'm not sure whether these messages affect the results. Before submitting, I changed the CLM and CAM namelists to control output (figures 2 and 3). My other settings are shown below:

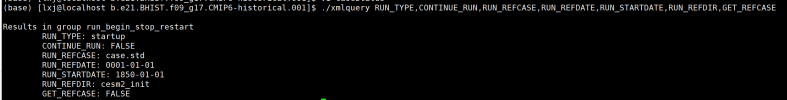

```
Results in group run_begin_stop_restart
RUN_TYPE: startup
CONTINUE_RUN: FALSE
RUN_REFCASE: case.std
RUN_REFDATE: 0001-01-01
RUN_STARTDATE: 1897-01-01
RUN_REFDIR: cesm2_init
GET_REFCASE: FALSE

Results in group run_begin_stop_restart
STOP_N: 5
STOP_OPTION: ndays
RESUBMIT: 0

Results in group mach_pes
NTASKS: ['CPL:64', 'ATM:64', 'LND:64', 'ICE:64', 'OCN:64', 'ROF:64', 'GLC:64', 'WAV:64', 'IAC:64', 'ESP:64']
NTHRDS: ['CPL:1', 'ATM:1', 'LND:1', 'ICE:1', 'OCN:1', 'ROF:1', 'GLC:1', 'WAV:1', 'IAC:1', 'ESP:1']
ROOTPE: ['CPL:0', 'ATM:0', 'LND:0', 'ICE:0', 'OCN:0', 'ROF:0', 'GLC:0', 'WAV:0', 'IAC:0', 'ESP:0']

DOUT_S: TRUE
```
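For comparison with the concurrent-ocean layout suggested earlier, the Python sketch below parses the NTASKS and ROOTPE lists shown above and reports which components share PEs with the ocean. The list format is assumed from the output in this post, and the helper names are illustrative, not CIME functions.

```python
"""Sketch: find which components share PEs with OCN, using the
NTASKS/ROOTPE values posted above (format assumed from that output)."""

NTASKS = ['CPL:64', 'ATM:64', 'LND:64', 'ICE:64', 'OCN:64',
          'ROF:64', 'GLC:64', 'WAV:64', 'IAC:64', 'ESP:64']
ROOTPE = ['CPL:0', 'ATM:0', 'LND:0', 'ICE:0', 'OCN:0',
          'ROF:0', 'GLC:0', 'WAV:0', 'IAC:0', 'ESP:0']


def parse(entries):
    """Turn ['CPL:64', ...] into {'CPL': 64, ...}."""
    return {name: int(value) for name, value in (e.split(":") for e in entries)}


def overlaps_with(target, ntasks, rootpe):
    """Sorted components whose range [root, root + n) intersects target's."""
    t_lo = rootpe[target]
    t_hi = t_lo + ntasks[target]
    return sorted(
        comp for comp in ntasks
        if comp != target
        and rootpe[comp] < t_hi
        and t_lo < rootpe[comp] + ntasks[comp]
    )


if __name__ == "__main__":
    n, r = parse(NTASKS), parse(ROOTPE)
    print("Shares PEs with OCN:", overlaps_with("OCN", n, r))
```

Here every other component lands on PEs 0-63 alongside OCN, so nothing runs concurrently with the ocean; in the out-of-the-box layout, the same check would return an empty list for OCN.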

As for BHIST: unfortunately, these 64 processors are all I have, so I may try BHIST at a lower resolution later.

Actually, I think an F compset may be more suitable for me, because I plan to research land-atmosphere coupling in the future.

Thank you again, Bill. You have really helped me a lot!