I was able to load the modules properly, but I am now running into the following error when I run ./case.build after creating a new case:

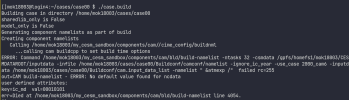

mok18003@login6:~/cases/case01 $ ./case.build

Building case in directory /home/mok18003/cases/case01

sharedlib_only is False

model_only is False

Generating component namelists as part of build

Creating component namelists

Calling /home/mok18003/my_cesm_sandbox/components/cam//cime_config/buildnml

...calling cam buildcpp to set build time options

CAM namelist copy: file1 /home/mok18003/cases/case01/Buildconf/camconf/atm_in file2 /gpfs/homefs1/mok18003/scratch/case01/run/atm_in

Calling /home/mok18003/my_cesm_sandbox/components/clm//cime_config/buildnml

Calling /home/mok18003/my_cesm_sandbox/components/cice//cime_config/buildnml

...calling cice buildcpp to set build time options

File not found: grid_file = "/gpfs/homefs1/mok18003/scratch/inputdata/ocn/pop/gx1v6/grid/horiz_grid_20010402.ieeer8", will attempt to download in check_input_data phase

File not found: kmt_file = "/gpfs/homefs1/mok18003/scratch/inputdata/ocn/pop/gx1v6/grid/topography_20090204.ieeei4", will attempt to download in check_input_data phase

File not found: ice_ic = "/gpfs/homefs1/mok18003/scratch/inputdata/ice/cice/b.e15.B1850G.f09_g16.pi_control.25.cice.r.0041-01-01-00000.nc", will attempt to download in check_input_data phase

Calling /home/mok18003/my_cesm_sandbox/components/pop//cime_config/buildnml

...calling pop buildcpp to set build time options

Traceback (most recent call last):

File "/home/mok18003/cases/case01/./case.build", line 147, in <module>

_main_func(__doc__)

File "/home/mok18003/cases/case01/./case.build", line 140, in _main_func

success = build.case_build(caseroot, case=case, sharedlib_only=sharedlib_only,

File "/home/mok18003/my_cesm_sandbox/cime/scripts/Tools/../../scripts/lib/CIME/build.py", line 570, in case_build

return run_and_log_case_status(functor, "case.build", caseroot=caseroot)

File "/home/mok18003/my_cesm_sandbox/cime/scripts/Tools/../../scripts/lib/CIME/utils.py", line 1634, in run_and_log_case_status

rv = func()

File "/home/mok18003/my_cesm_sandbox/cime/scripts/Tools/../../scripts/lib/CIME/build.py", line 568, in <lambda>

functor = lambda: _case_build_impl(caseroot, case, sharedlib_only, model_only, buildlist,

File "/home/mok18003/my_cesm_sandbox/cime/scripts/Tools/../../scripts/lib/CIME/build.py", line 511, in _case_build_impl

sharedpath = _build_checks(case, build_threaded, comp_interface,

File "/home/mok18003/my_cesm_sandbox/cime/scripts/Tools/../../scripts/lib/CIME/build.py", line 204, in _build_checks

case.create_namelists()

File "/home/mok18003/my_cesm_sandbox/cime/scripts/Tools/../../scripts/lib/CIME/case/preview_namelists.py", line 88, in create_namelists

run_sub_or_cmd(cmd, (caseroot), "buildnml", (self, caseroot, compname), case=self)

File "/home/mok18003/my_cesm_sandbox/cime/scripts/Tools/../../scripts/lib/CIME/utils.py", line 345, in run_sub_or_cmd

getattr(mod, subname)(*subargs)

File "/home/mok18003/my_cesm_sandbox/components/pop//cime_config/buildnml", line 89, in buildnml

mod.buildcpp(case)

File "/home/mok18003/my_cesm_sandbox/components/pop/cime_config/buildcpp", line 241, in buildcpp

pop_cppdefs = determine_tracer_count(case, caseroot, srcroot, decomp_cppdefs)

File "/home/mok18003/my_cesm_sandbox/components/pop/cime_config/buildcpp", line 117, in determine_tracer_count

from MARBL_wrappers import MARBL_settings_for_POP

File "/home/mok18003/my_cesm_sandbox/components/pop/MARBL_scripts/MARBL_wrappers/__init__.py", line 1, in <module>

from MARBL_settings import MARBL_settings_for_POP

ModuleNotFoundError: No module named 'MARBL_settings'

Do I have to install any additional packages using pip? Any help would be greatly appreciated.