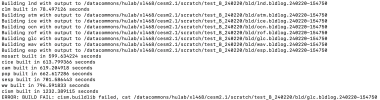

Thanks Jim. For recent GNU compiler versions you will need to add the flags

-fallow-argument-mismatch -fallow-invalid-boz

to the FCFLAGS.
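As a rough sketch of when these flags apply: both options were introduced in gfortran 10, so older compilers reject them. The helper name `extra_fcflags` below is hypothetical and only for illustration, and how the result is passed to the build depends on your setup.

```shell
# Hypothetical helper: print the extra flags needed for a given
# gfortran major version (the two options exist from GCC 10 onward).
extra_fcflags() {
  major="$1"
  if [ "$major" -ge 10 ]; then
    echo "-fallow-argument-mismatch -fallow-invalid-boz"
  fi
}

# If gfortran is installed, report what would be added for it.
# `gfortran -dumpversion` prints the version, e.g. "11" or "11.4.0".
if command -v gfortran >/dev/null 2>&1; then
  major=$(gfortran -dumpversion | cut -d. -f1)
  echo "FCFLAGS additions: $(extra_fcflags "$major")"
fi
```

For gfortran 9 and older the helper prints nothing, so nothing is added to the FCFLAGS.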

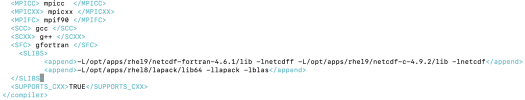

Do you mean I should add this statement to config_compilers.xml?

<ADD_FCFLAGS>-fallow-argument-mismatch -fallow-invalid-boz</ADD_FCFLAGS>

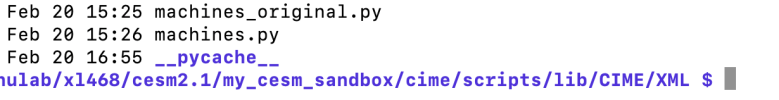

I'm not sure whether I did it correctly:

Could you please give more details on how exactly to add FCFLAGS?

Thanks,

Xiang