Dear all,

I have run into problems building a B1850 case on our HPC. An X compset case was built and ran successfully, although it produced some warnings during the build. However, I have already spent almost two weeks trying to get B1850 to work, without success.

The first time I ran the following:

```shell
./create_newcase --case cesmB --res f19_g17 --compset B1850 --mach ahren
./case.setup
./case.build
```

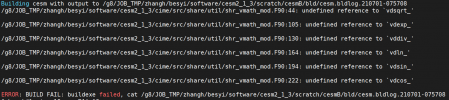

the build failed. When I checked cesm.bldlog.xxx with `cat`, almost all of the errors were `undefined reference to xxx` errors.

After searching the forum for related posts, I suspected that something was wrong with my LAPACK and BLAS setup, so I added the following to my .bashrc:

```shell
export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/g1/app/mathlib/netcdf/4.4.0/intel/lib/:/g1/app/mathlib/lapack/3.4.2/intel/lib:/g1/app/mathlib/blas/3.8.0/intel
```

and appended the following to SLIBS:

```xml
<append> -L/g1/app/mathlib/lapack/3.4.2/intel/lib -llapack -L/g1/app/mathlib/blas/3.8.0/intel blas_LINUX.a </append>
```

This seemed to help, because the `undefined reference to xxx` errors no longer appeared in the cesm.bldlog file, but the build still failed with the error `ifort:error #10236:File not found 'blas_LINUX.a'`. Because the HPC directory /g1/app/mathlib/blas/3.8.0/intel contains only a blas_LINUX.a file and no libblas.a, I installed lapack-3.10.0 and BLAS-3.10.0 under my work path /g8/JOB_TMP/zhangh/besyi/software/.
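For context on the `blas_LINUX.a` error, here is a simplified Python model of how a GNU-style link line resolves its inputs: every `-Ldir` applies to every `-lname`, and `-lname` is looked up as `libname.so` and then `libname.a` in those directories, while an argument without a leading dash, such as `blas_LINUX.a`, is an ordinary input file resolved relative to the directory the link runs in. The function `resolve_link_inputs` and the temporary directories are hypothetical stand-ins; system default search paths, `-static` and the `-l:file` form are deliberately ignored, so this is a sketch of the naming rule, not of what ifort does internally.

```python
import os
import tempfile
from typing import List, Optional


def resolve_link_inputs(args: List[str], cwd: str) -> List[Optional[str]]:
    """Simplified model of how a GNU-style link line resolves its inputs.

    - Every "-Ldir" is collected first: it applies to all "-l" options,
      wherever it appears on the line.
    - "-lname" is searched as libname.so, then libname.a, in each -L dir.
    - Any argument not starting with "-" is an input file path; a relative
      path is taken relative to cwd, and -L directories do not apply to it.
    - Other options (e.g. -mkl=cluster) are ignored in this sketch, as are
      system default directories, -static and "-l:file".
    Returns the resolved path, or None if not found, for each -l/file input.
    """
    search_dirs = [arg[2:] for arg in args if arg.startswith("-L")]
    resolved: List[Optional[str]] = []
    for arg in args:
        if arg.startswith("-L"):
            continue  # already collected above
        if arg.startswith("-l"):
            name = arg[2:]
            found = None
            for directory in search_dirs:
                for candidate in (f"lib{name}.so", f"lib{name}.a"):
                    path = os.path.join(directory, candidate)
                    if os.path.isfile(path):
                        found = path
                        break
                if found is not None:
                    break
            resolved.append(found)
        elif arg.startswith("-"):
            continue  # other compiler/linker options: not modelled here
        else:
            path = arg if os.path.isabs(arg) else os.path.join(cwd, arg)
            resolved.append(path if os.path.isfile(path) else None)
    return resolved


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        # Hypothetical stand-ins: a BLAS directory holding only blas_LINUX.a
        # (like /g1/app/mathlib/blas/3.8.0/intel) and a separate build directory.
        blas_dir = os.path.join(root, "blas")
        bld_dir = os.path.join(root, "bld")
        os.makedirs(blas_dir)
        os.makedirs(bld_dir)
        archive = os.path.join(blas_dir, "blas_LINUX.a")
        open(archive, "w").close()

        # Bare file name: looked for in bld_dir, not blas_dir, so not found.
        print(resolve_link_inputs(["-L" + blas_dir, "blas_LINUX.a"], bld_dir))
        # -lblas needs libblas.so or libblas.a, so it is not found either.
        print(resolve_link_inputs(["-L" + blas_dir, "-lblas"], bld_dir))
        # A full path to the archive is found.
        print(resolve_link_inputs([archive], bld_dir))
```

In this model, neither the bare `blas_LINUX.a` nor `-lblas` resolves against a directory that only holds `blas_LINUX.a`; only a full path to the archive does.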

My current export is:

```shell
export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/g1/app/mathlib/netcdf/4.4.0/intel/lib/:/g8/JOB_TMP/zhangh/besyi/software/lapack-3.10.0/:/g8/JOB_TMP/zhangh/besyi/software/BLAS-3.10.0/
```

and the SLIBS part of config_compilers.xml is:

```xml
<SLIBS>
  <append> -L/g8/JOB_TMP/zhangh/besyi/software/lapack-3.10.0/ -llapack -L/g8/JOB_TMP/zhangh/besyi/software/BLAS-3.10.0/ -lblas </append>
  <append MPILIB="mpich"> -mkl=cluster </append>
  <append MPILIB="mpich2"> -mkl=cluster </append>
  <append MPILIB="mvapich"> -mkl=cluster </append>
  <append MPILIB="mvapich2"> -mkl=cluster </append>
  <append MPILIB="mpt"> -mkl=cluster </append>
  <append MPILIB="openmpi"> -mkl=cluster </append>
  <append MPILIB="impi"> -mkl=cluster </append>
  <append MPILIB="mpi-serial"> -mkl </append>
</SLIBS>
```

Then I built B1850 again, hoping this would fix it, but it did not:

```
ERROR: BUILD FAIL: buildexe failed, cat /g8/JOB_TMP/zhangh/besyi/software/cesm2_1_3/scratch/cesmB/bld/cesm.bldlog.210630-143855
```
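The SLIBS section above mixes one unconditional `<append>` with several `MPILIB`-specific ones. The Python sketch below evaluates such a section for a given MPI library, assuming (not verified against the CIME source) that an `<append>` with no attributes always applies and one with an `MPILIB` attribute applies only when it matches the case's MPILIB; real CIME handling also covers other attributes and inheritance between compiler entries. The function `effective_slibs` is hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Dict

# SLIBS section as posted (from config_compilers.xml).
SLIBS_XML = """
<SLIBS>
  <append> -L/g8/JOB_TMP/zhangh/besyi/software/lapack-3.10.0/ -llapack -L/g8/JOB_TMP/zhangh/besyi/software/BLAS-3.10.0/ -lblas </append>
  <append MPILIB="mpich"> -mkl=cluster </append>
  <append MPILIB="mpich2"> -mkl=cluster </append>
  <append MPILIB="mvapich"> -mkl=cluster </append>
  <append MPILIB="mvapich2"> -mkl=cluster </append>
  <append MPILIB="mpt"> -mkl=cluster </append>
  <append MPILIB="openmpi"> -mkl=cluster </append>
  <append MPILIB="impi"> -mkl=cluster </append>
  <append MPILIB="mpi-serial"> -mkl </append>
</SLIBS>
"""


def effective_slibs(xml_text: str, case_attrs: Dict[str, str]) -> str:
    """Join the text of every <append> whose attributes all match case_attrs.

    Assumed rule (a simplification of CIME): an <append> without attributes
    always applies; otherwise each attribute (e.g. MPILIB) must equal the
    case's value.
    """
    root = ET.fromstring(xml_text.strip())
    parts = []
    for elem in root.findall("append"):
        if all(case_attrs.get(key) == value for key, value in elem.attrib.items()):
            text = (elem.text or "").strip()
            if text:
                parts.append(text)
    return " ".join(parts)


if __name__ == "__main__":
    for mpilib in ("impi", "openmpi", "mpi-serial"):
        flags = effective_slibs(SLIBS_XML, {"MPILIB": mpilib})
        print(f"{mpilib}: {flags}")
```

Under that assumption, every MPILIB choice listed here would pass both the self-built LAPACK/BLAS (`-llapack`, `-lblas`) and Intel MKL (`-mkl=cluster` or `-mkl`) to the link.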

All bldlog files, including the warnings shown on the screen, have been attached.

Can anyone help us out here? Thanks a lot in advance.