Have you been doing this primarily with a Dockerfile to build the container or some other method? If the former, maybe we can get it under version control for easier collaboration? I was originally planning on doing a from-scratch Singularity recipe file to build my container, and then I just happened to stumble upon this at an opportune time :)

We've got a GitHub repo here, and collaboration sounds great:

ESCOMP/ESCOMP-Containers

Note that right now the CESM directory has quite a few changes from a 'base' CESM install. That's because we wanted to get something out to experiment with while CESM 2.2 was going through the release process. In the near future, most of those changes (generally focused on providing a 'container' machine config) will be included in CIME, and thus be unnecessary here. In short, it'll get a lot less ugly soon, but that repo should still let you play around.

The plan is to have Singularity recipes in there at some point, too.

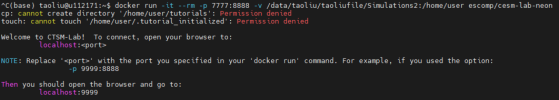

We also have the start of a tutorial repo (for Jupyter Notebooks), but that's probably of less interest to you, I imagine.

GitHub - NCAR/CESM-Lab-Tutorial: Tutorial Jupyter Notebooks for the 'CESM-Lab' environment

What kind of passthrough? I have experience using GPU passthrough on Singularity for NVIDIA GPUs (TensorFlow/deep learning), which typically works out of the box with the --nv flag (although the user might need to be in a "video" group for it to work). If memory serves, that worked on the RHEL7 kernel (3.10), but I haven't tested on an earlier kernel. I don't have experience with Radeon or other ASIC passthrough, so I'd be less helpful there. The Singularity team does appear to be relatively responsive though, so if you pass along those details I don't mind looking into it.

Ah, I meant pass-through to the underlying network. The MPICH ABI Compatibility Initiative lets you replace one compatible MPI implementation with another at runtime, and Cray machines, for example, can do this automagically with Shifter to use the native Cray MPI runtime. I think when I saw this, they were using Singularity containers, but Shifter is the key thing. So, for example, I can compile a CESM case in a container (with MPICH, and no knowledge of a high-speed network like Cray Aries or even InfiniBand), run it on that Cray, and have the host-level network used. Here's a neat paper showing some of this from Blue Waters:

Container solutions for HPC Systems: A Case Study of Using Shifter on Blue Waters

On Cheyenne (our system), we have SGI MPT, and there's a compatibility mode that also allows for this, but it required a little bit of work to get going, unlike Shifter's automatic use of it. I haven't looked into this in a while, but it's a really great thing for HPC-like use, and it's on the 'to do' list to check up on again.
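To make the idea a bit more concrete, here's a rough sketch of what doing the swap by hand with Singularity might look like. It is not what Shifter does internally, and not the actual Cheyenne setup. It just builds a launch command that bind-mounts a host MPI library directory into the container and points the dynamic linker at it, so an executable built against the container's MPICH could pick up an ABI-compatible host library instead. The function name, image name, executable and library path are all made up for illustration, and the launcher will differ by site (mpiexec is only a placeholder).

```python
import os
import shlex


def build_host_mpi_command(image, executable, host_mpi_lib_dir, nranks,
                           launcher="mpiexec"):
    """Return (argv, env) for running `executable` from `image` against
    the host's MPI libraries. All arguments are hypothetical examples."""
    # Bind the host MPI library directory to the same path in the container.
    bind = f"{host_mpi_lib_dir}:{host_mpi_lib_dir}"
    argv = [
        launcher, "-n", str(nranks),
        "singularity", "exec", "--bind", bind,
        image, executable,
    ]
    env = dict(os.environ)
    # Singularity passes SINGULARITYENV_<NAME> into the container as <NAME>,
    # so this asks the loader to search the host MPI directory first.
    # Assumption: the host library is ABI-compatible with the container's MPICH.
    env["SINGULARITYENV_LD_LIBRARY_PATH"] = host_mpi_lib_dir
    return argv, env


if __name__ == "__main__":
    # Hypothetical image, executable and host path, for illustration only.
    argv, env = build_host_mpi_command(
        "cesm.sif", "./cesm.exe", "/opt/host-mpi/lib", nranks=4
    )
    print(shlex.join(argv))
    # To actually launch it: subprocess.run(argv, env=env, check=True)
```

In practice the host MPI library usually pulls in other host libraries (network drivers and so on), so more bind mounts would likely be needed. That's the "little bit of work" that Shifter handles for you.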

Cheers,

- Brian